Matthias Klumpp: Update notifications in Debian Jessie

Piwik told me that people are still sharing my post about the state of GNOME-Software and update notifications in Debian Jessie.

So I thought it might be useful to publish a small update on that matter:

- If you are using GNOME or KDE Plasma with Debian 8 (Jessie), everything is fine: you will receive update notifications through GNOME Shell (via g-s-d) or Apper, respectively. You can perform updates on GNOME with GNOME-PackageKit and on KDE Plasma with Apper.

- If you are using a desktop environment that does not support PackageKit directly (for example Xfce, which previously relied on external tools), you might want to try pk-update-icon from jessie-backports. This small GTK+ tool will notify you about updates and install them via GNOME-PackageKit, basically doing what GNOME-PackageKit did by itself before the functionality was moved into GNOME-Software.

- For Debian Stretch, the upcoming release of Debian, we will have gnome-software ready and fully working. However, one of the design decisions of upstream is to only allow offline updates (= download updates in the background, install on request at next reboot) with GNOME-Software. In case you don't want to use that, GNOME-PackageKit will still be available, and so are of course all the CLI tools.

- For KDE Plasma 5 on Debian 9 (Stretch), a nice AppStream-based software management solution with a user-friendly updater is also planned and being developed upstream. More information on that will come when there's something ready to show ;-).

UPDATE: It appears that many people have problems with getting update notifications in GNOME on Jessie. If you are affected by this, please try the following:

- Open dconf-editor and navigate to org.gnome.settings-daemon.plugins.updates. Check if the key active is set to true.

- If that doesn't help, also check if, at org.gnome.settings-daemon.plugins.updates, the frequency-refresh-cache value is set to a sane value (e.g. 86400).

- Consider increasing the priority value (if it isn't at 300 already).

- If all of that doesn't help: I guess some internal logic in g-s-d is preventing a cache refresh then (e.g. because it thinks it is on an expensive network connection and therefore doesn't refresh the cache automatically, or thinks it is running on battery). This is a bug. If that still happens on Debian Stretch with GNOME-Software, please report a bug against the gnome-software package. As a workaround for Jessie you can enable unconditional cache refreshing via the APT cronjob by installing apt-config-auto-update.
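If you prefer a terminal over dconf-editor, the three keys mentioned above can also be read with the gsettings command-line tool. The following is only a minimal sketch: it assumes that gsettings is installed and that the org.gnome.settings-daemon.plugins.updates schema exists on your system (as it should on Jessie with g-s-d). The helper names gsettings_get_command and read_update_settings are made up for this illustration.

```python
import shutil
import subprocess

# Schema and keys as named in the steps above.
SCHEMA = "org.gnome.settings-daemon.plugins.updates"
KEYS = ("active", "frequency-refresh-cache", "priority")


def gsettings_get_command(key, schema=SCHEMA):
    """Build the argv for reading one key with the gsettings CLI."""
    return ["gsettings", "get", schema, key]


def read_update_settings(keys=KEYS):
    """Return {key: value}, with None where gsettings or the key is unavailable."""
    if shutil.which("gsettings") is None:
        # Assumption: no gsettings binary means nothing can be read here.
        return {key: None for key in keys}
    values = {}
    for key in keys:
        result = subprocess.run(
            gsettings_get_command(key),
            stdout=subprocess.PIPE,
            stderr=subprocess.PIPE,
            universal_newlines=True,
        )
        values[key] = result.stdout.strip() if result.returncode == 0 else None
    return values


if __name__ == "__main__":
    for key, value in read_update_settings().items():
        print("{}: {}".format(key, value if value is not None else "unavailable"))
```

The script only reads the values; changing them is still best done in dconf-editor as described above, so you can see what you are modifying.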

Maybe setting up a BOF would also be a good idea.


DebConf15 will be held in Heidelberg, Germany from the 15th to the 22nd of August, 2015. The clock is ticking and our annual conference is approaching. There are less than three months to go, and the Call for Proposals period closes in only a few weeks.

This year, we are encouraging people to submit half-length 20-minute events, to allow attendees to have a broader view of the many things that go on in the project in the limited amount of time that we have.

To make sure that your proposal is part of the official DebConf schedule you should submit it before June 15th.

If you have already sent your proposal, please log in to summit and make sure to improve your description and title. This will help us fit the talks into tracks, and devise a cohesive schedule.

For more details on how to submit a proposal see:

KDE

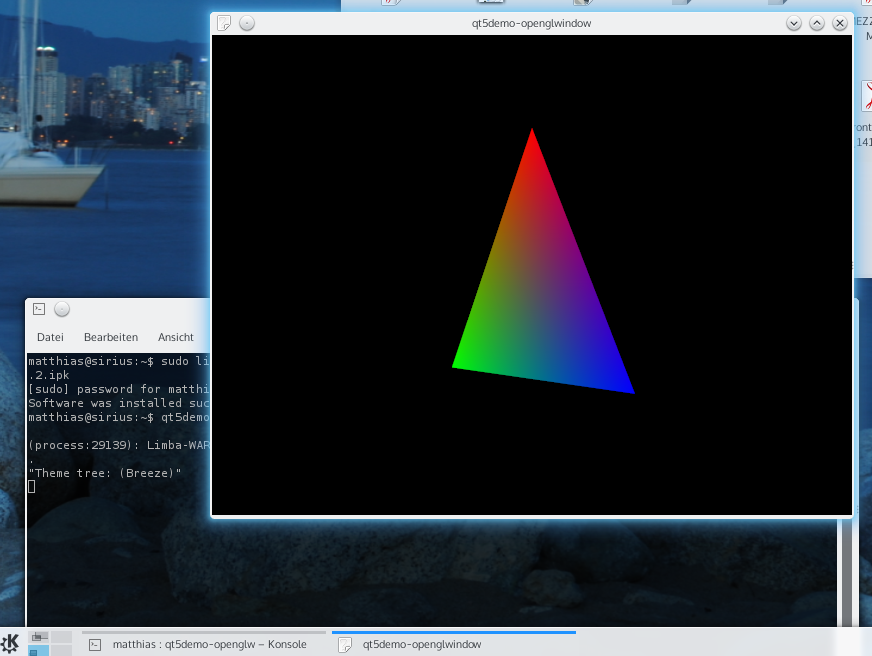

We will ship with at least KDE Applications 4.13, maybe some 4.14 things as well (if we are lucky, since Tanglu will likely be in feature-freeze when this stuff is released). The other KDE parts will remain on their latest version from the 4.x series. For Tanglu 3, we might update KDE SC 4.x to KDE Frameworks 5 and use Plasma 5 though.

GNOME

Due to the lack of manpower on the GNOME flavor, GNOME will ship in the same version available in Debian Sid, maybe with some stuff pulled from Experimental where it makes sense. A GNOME flavor is planned to be available.

Common infrastructure

We currently run with systemd 208, but a switch to 210 is planned. Tanglu 2 also targets the X.org server in version 1.16. For more changes, stay tuned. The kernel release for Bartholomea is also not yet decided.

Artwork

Work on the default Tanglu 2 design has started as well; any artwork submissions are most welcome!

Tanglu joins the OIN

The Tanglu project is now a proud member (licensee) of the
